Web Scraping, Natural Language Processing, and Classification on Reddit

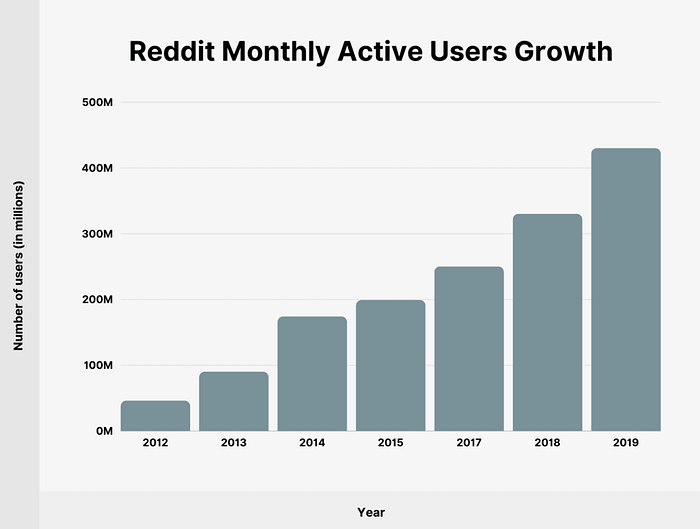

Although online forums, once an essential part of internet culture, have all but died out, Reddit seems to have no trouble staying relevant. This could be because Reddit is effectively an aggregate of all forums (or subreddits) that anyone could potentially have an interest in. All it takes is an account and you can create a forum on any topic you’d like. This is great for creativity and for fostering more niche communities, but the lack of centralization can lead to redundant subreddits. At some point, somebody is bound to have an idea for a subreddit that is nearly identical to an existing one. This happens frequently, although the line between a sub-branch of a topic and a simple rephrasing of it is blurry.

Would we even be able to tell which subreddit a given post came from if many communities addressed the topic of that post? That’s what I set out to discover. I wanted to look at a topic that I have great interest in, travelling, and find a community of like-minded people. It didn’t take long to come across r/travel and r/solotravel, both communities with millions of members that I figured would differ noticeably in content. After exploring the posts from both of these subreddits, I found mixed results.

To complete this project, I used the Pushshift API to do my web scraping. Over several days, I was able to pull roughly 100,000 posts from each subreddit using the following code:

    import pandas as pd
    import time
    import datetime as dt
    import requests

    s_type = 'submission'
    subreddit = 'travel'
    unix_time_stamp = 1626554528  # the time I started my pulls
    column_names = ['title', 'author', 'created_utc', 'subreddit', 'selftext']
    filler_row = [['NA', 'NA', unix_time_stamp, 'NA', 'NA']]

    # initializing DataFrame
    full_df = pd.DataFrame(columns=column_names, data=filler_row)
    full_df.to_csv('./travel_posts.csv', index=False)

    def pushshift_query(full_df_path, subreddit, s_type, iters=3):
        full_df = pd.read_csv(full_df_path)
        for pull in range(iters):
            # page backwards: ask only for posts older than the oldest one collected so far
            url = f"https://api.pushshift.io/reddit/search/{s_type}/?subreddit={subreddit}&before={full_df['created_utc'].min()}&size=100"
            # for API errors
            try:
                res = requests.get(url)
            except requests.RequestException:
                continue
            if res.status_code == 200:
                post_list = res.json()['data']
                temp_df = pd.DataFrame(post_list)[column_names]
                full_df = pd.concat([full_df, temp_df])
                print(full_df.shape)
                print(full_df.nunique())
                print('waiting until next pull...')
                print()
                time.sleep(5)
            else:
                continue
        # save everything collected so far back to the same file
        full_df.to_csv(full_df_path, index=False)

    pushshift_query('./travel_posts.csv', 'travel', 'submission', iters=1000)
    # assumes ./solotravel_posts.csv was initialized the same way as travel_posts.csv above
    pushshift_query('./solotravel_posts.csv', 'solotravel', 'submission', iters=1000)
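The loop above pages backwards through time: each request asks only for posts older than the oldest timestamp collected so far. Here is a minimal offline sketch of that cursor logic. The names `ARCHIVE`, `fake_fetch`, and `pull_all` are hypothetical stand-ins that I made up for illustration, with `fake_fetch` playing the role of the API call so the example runs without a network connection.

```python
# Pretend archive of post timestamps (hypothetical data, not real Reddit posts).
ARCHIVE = list(range(1, 251))

def fake_fetch(before, size=100):
    """Stand-in for the API: return up to `size` posts older than `before`, newest first."""
    older = [t for t in ARCHIVE if t < before]
    return [{"created_utc": t} for t in sorted(older, reverse=True)[:size]]

def pull_all(start, fetch, max_iters=10):
    """Walk backwards in time, moving the cursor to the oldest post seen so far."""
    cursor = start
    collected = []
    for _ in range(max_iters):
        batch = fetch(cursor)
        if not batch:
            break  # nothing older is left to pull
        collected.extend(batch)
        cursor = min(post["created_utc"] for post in batch)
    return collected

posts = pull_all(start=1000, fetch=fake_fetch)
print(len(posts))  # 250
```

Because the cursor only ever moves to strictly older timestamps, no post is pulled twice. Stopping on an empty batch is an addition in this sketch; the original loop simply runs for a fixed number of iterations.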

By the time the data was fully read in, many of the posts had already been deleted or had other issues, so the majority had too many null values to work with. I ended up using 20,000 posts from each subreddit to build my models. I then used nltk.tokenize.word_tokenize(), nltk.stem.porter.PorterStemmer(), and nltk.corpus.stopwords.words() to clean up the data for vectorization. I only examined posts in English and filtered out any characters outside the 26-letter English alphabet. I used sklearn.feature_extraction.text.TfidfVectorizer since sklearn.feature_extraction.text.CountVectorizer gave me less accurate models.
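As a rough illustration of the cleaning step, here is a dependency-free sketch of the letter filtering and stop-word removal. It is not the exact code I ran: the stop-word set is a tiny hand-picked stand-in for nltk's English list, the `clean_post` name is made up for this example, and stemming with PorterStemmer is left out so that the sketch needs no third-party packages.

```python
import re

# Tiny illustrative stop-word list (an assumption); the project used nltk's English list.
STOP_WORDS = {"a", "an", "and", "the", "to", "in", "i", "is", "of", "for", "my"}

def clean_post(text):
    """Lowercase the text, keep only the letters a-z, and drop stop words."""
    letters_only = re.sub(r"[^a-z]+", " ", text.lower())
    return [tok for tok in letters_only.split() if tok not in STOP_WORDS]

print(clean_post("Is Lisbon safe for a solo trip in 2022?!"))
# ['lisbon', 'safe', 'solo', 'trip']
```

The cleaned tokens can then be joined back into a string and handed to a vectorizer.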

I tried several types of models to push my accuracy as high as possible. Accuracy was the most important metric to me here because the type of misclassification didn’t matter for my goal, which was to correctly classify as many posts as possible as either r/travel or r/solotravel.
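To make the metric concrete, here is a small sketch of accuracy next to the null-model baseline, which always predicts the most common class. The labels are toy values and the helper names are my own. With two equally sized classes, as in this project, that baseline is 50%.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    matches = sum(t == p for t, p in zip(y_true, y_pred))
    return matches / len(y_true)

def null_accuracy(y_true):
    """Accuracy of always predicting the most common class."""
    most_common_count = Counter(y_true).most_common(1)[0][1]
    return most_common_count / len(y_true)

# Toy labels for illustration only.
y_true = ["travel", "travel", "solotravel", "solotravel"]
y_pred = ["travel", "solotravel", "solotravel", "solotravel"]

print(accuracy(y_true, y_pred))  # 0.75
print(null_accuracy(y_true))     # 0.5
```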

I started out with a basic Bernoulli Naïve Bayes model (using CountVectorizer), which gave me training and testing scores of 0.680 and 0.656, respectively. The variance wasn’t bad, but the accuracy was only about 15 points higher than a null model, which scores 50% on two equally sized classes. I then switched to a TfidfVectorizer and a Multinomial Naïve Bayes model. By incorporating a pipeline and a cross-validated grid search over maximum features and n-gram range, I was able to boost my training and testing scores to 0.763 and 0.741. At this point the test accuracy was about 24 points above the baseline, or nearly 50% better than simply guessing in relative terms.
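The pipeline and grid search described above can be sketched roughly as follows. This is not my original notebook code: the toy posts, the parameter values in the grid, and the fold count are all assumptions chosen so that the example runs quickly on a dozen sentences.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

# Toy stand-in posts (hypothetical); label 0 = r/travel, 1 = r/solotravel.
texts = [
    "family trip to italy with kids next summer",
    "best honeymoon resorts for couples in bali",
    "group tour of japan with my parents",
    "road trip with friends across california",
    "cruise recommendations for our anniversary",
    "visiting paris with my wife in april",
    "first solo trip to thailand any advice",
    "feeling lonely in hostels while travelling alone",
    "is vietnam safe for a solo female traveller",
    "meeting people in hostels when travelling alone",
    "solo backpacking through peru on a budget",
    "eating alone at restaurants on a solo trip",
]
labels = [0] * 6 + [1] * 6

# Vectorizer and classifier chained so the grid search tunes both together.
pipe = Pipeline([
    ("tfidf", TfidfVectorizer()),
    ("nb", MultinomialNB()),
])

# Illustrative grid over maximum features and n-gram range.
param_grid = {
    "tfidf__max_features": [50, 100],
    "tfidf__ngram_range": [(1, 1), (1, 2)],
}

search = GridSearchCV(pipe, param_grid, cv=3, scoring="accuracy")
search.fit(texts, labels)

print(search.best_params_)
print(search.best_score_)
```

Putting the vectorizer inside the pipeline means it is refit on the training portion of each fold, so the vocabulary never leaks in from the held-out posts.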

Many more models were attempted, including a Support Vector Machine (73% test score), an AdaBoost Classifier (74% test score), and a Random Forest Classifier (70% test score but >99% train score, a clear sign of overfitting). No matter which parameters I chose or how I organized the data, I couldn’t seem to break the 75% threshold. This isn’t to say that it isn’t possible. I’m sure that stronger models could be created using more sophisticated neural networks, a more specialized NLP algorithm, etc., but we’re dealing with human-generated input here. There’s only so much a computer can do to tell the difference between hastily written internet posts by people with varying levels of fluency in a shared language (especially given the domain, that is, people who travel). I doubt even the average human being would be able to sort these posts into the right subreddit given how similar the topics are. So, for now, it seems like roughly 75% accuracy is going to be our cap.

One final note: the word “solo” appeared in 782 posts (3.91%) from r/travel, while 9,647 posts (48.24%) from r/solotravel contained it. This fact could likely be worked into a model as a feature to get a higher accuracy score, although it would nearly guarantee that a post in r/travel containing the word “solo” would be misclassified. In the end, I chose not to use it because it felt like cheating.
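For a sense of scale, here is a small sketch that counts posts containing a whole word and then works out how a one-word rule would score on its own. The rule is "predict r/solotravel whenever the post contains solo". The accuracy figure assumes the counts above are taken over the 20,000-post samples from each subreddit, which is what the percentages imply. The helper name and toy posts are made up for illustration.

```python
import re

def share_containing(posts, word):
    """Fraction of posts that contain `word` as a whole word, ignoring case."""
    pattern = re.compile(rf"\b{re.escape(word)}\b", re.IGNORECASE)
    hits = sum(1 for post in posts if pattern.search(post))
    return hits / len(posts)

# Toy posts for illustration only; "soloist" does not count as a whole-word match.
toy_posts = ["Solo trip to Peru", "Family cruise", "solo-friendly hostels", "A soloist on tour"]
print(share_containing(toy_posts, "solo"))  # 0.5

# Counts reported above; 20,000 posts per subreddit is an assumption.
n_per_sub = 20_000
solo_in_travel = 782
solo_in_solotravel = 9_647

# The rule is right on r/solotravel posts with "solo" and r/travel posts without it.
correct = solo_in_solotravel + (n_per_sub - solo_in_travel)
rule_accuracy = correct / (2 * n_per_sub)
print(rule_accuracy)  # 0.721625
```

Under those assumptions, this single rule would land at roughly 72% accuracy by itself, in the same range as the models above.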